How to Measure Data Quality: Building a Clarity Scorecard

"How's our data quality?" is one of those questions that usually gets answered with a shrug or a vague "pretty good, I think."

That's a problem. If you can't measure data quality, you can't improve it. You can't prove to stakeholders that your cleanup efforts are working. And you definitely can't catch things getting worse before they cause real damage.

A Clarity Score fixes this. It's a single number—0 to 100—that tells you how clean your dataset is right now. Not a feeling. Not a guess. A metric you can track, report on, and hold yourself accountable to.

This post walks through how to build one: what goes into the score, how to weight the components, and how to turn it into something you actually use.

What Is a Clarity Score?

A Clarity Score is a composite metric that combines multiple data quality dimensions into a single number. Think of it like a credit score for your data—one figure that summarizes a lot of underlying complexity.

The score runs from 0 (your data is a disaster) to 100 (your data is pristine). Most real-world datasets land somewhere between 60 and 85. Below 60, you've probably got serious problems affecting downstream work. Above 90, you're doing better than most.

Why bother with a single score when you could just track individual metrics? A few reasons:

- Communication. Executives don't want to hear about your duplicate rate, completeness percentage, and anomaly count separately. They want to know: is the data good or not? A single score answers that question.

- Trending. Individual metrics bounce around. One week your duplicate rate spikes because of a bad import, the next week it's fine. A composite score smooths out the noise and shows the real direction.

- Accountability. "Improve data quality" is vague. "Get the Clarity Score from 72 to 85 by end of quarter" is a goal you can actually work toward.

The score isn't magic—it's just math. But turning messy reality into a number forces you to define what "good" means, and that clarity is half the battle.

The Four Components

A good Clarity Score draws from four dimensions. You could add more, but these cover the problems that actually matter for most business data.

Completeness

Completeness measures how many of your required fields actually have values. If your customer records should have email addresses and 15% of them are blank, that's a completeness problem.

The tricky part is defining "required." Not every field matters equally. A missing middle name is fine. A missing email address on a marketing list makes the record nearly useless.

Start by identifying your critical fields—the ones where a blank value means the record can't serve its purpose. Then calculate:

Completeness % = (Records with all critical fields populated / Total records) × 100

For most B2B datasets, you'd flag fields like: primary email, company name, and at least one phone or address. The specific list depends on what you're using the data for.
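As a rough illustration, the completeness formula can be sketched in a few lines of Python. The record layout, the field names, and the rule that empty or whitespace-only strings count as blank are all assumptions made for this example, not part of any particular tool.

```python
def completeness_pct(records, critical_fields):
    """Percent of records where every critical field has a non-blank value."""
    if not records:
        raise ValueError("no records to score")

    def is_populated(value):
        # Assumption: None and whitespace-only strings both count as blank.
        return value is not None and str(value).strip() != ""

    complete = sum(
        1
        for rec in records
        if all(is_populated(rec.get(field)) for field in critical_fields)
    )
    return 100.0 * complete / len(records)


# Hypothetical sample data; "phone" is deliberately not treated as critical.
customers = [
    {"email": "ana@example.com", "company": "Acme", "phone": "+15551234567"},
    {"email": "", "company": "Globex", "phone": "+15557654321"},
    {"email": "lee@example.com", "company": "Initech", "phone": None},
    {"email": "sam@example.com", "company": "Umbrella", "phone": "+15550001111"},
]

completeness = completeness_pct(customers, ["email", "company"])
print(completeness)  # 75.0: one of the four records has a blank email
```

Note that the record with a missing phone still counts as complete, because only the fields you list as critical are checked.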

Consistency

Consistency measures whether data follows expected formats and patterns. Phone numbers in six different formats? That's a consistency problem. States written as "California," "CA," and "Calif" in the same column? Also consistency.

Unlike completeness, consistency issues don't always break things. A phone number written as "(555) 123-4567" versus "555-123-4567" is still a valid phone number. But inconsistency creates friction—it makes data harder to search, match, and analyze.

Consistency % = (Records matching format standards / Total records) × 100

Define your standards first. Pick a phone format (E.164 international is a good default). Pick a date format (ISO 8601). Pick title case or lowercase for names. Then measure against those standards.
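Here is one way the consistency check might look, assuming E.164 for phones and ISO 8601 calendar dates (YYYY-MM-DD) as the chosen standards. The function names, the field names, and the regular expressions are illustrative choices for this sketch; your own standards may call for different patterns.

```python
import re
from datetime import date

# Assumed standards: E.164 phones ("+" then up to 15 digits, no leading zero)
# and ISO 8601 calendar dates written as YYYY-MM-DD.
E164 = re.compile(r"\+[1-9]\d{1,14}")
ISO_DATE = re.compile(r"\d{4}-\d{2}-\d{2}")


def is_e164(value):
    return isinstance(value, str) and E164.fullmatch(value) is not None


def is_iso_date(value):
    if not isinstance(value, str) or ISO_DATE.fullmatch(value) is None:
        return False
    try:
        date.fromisoformat(value)  # rejects impossible dates such as Feb 30
    except ValueError:
        return False
    return True


def consistency_pct(records, validators):
    """Percent of records whose checked fields all match their format standard."""
    if not records:
        raise ValueError("no records to score")
    ok = sum(
        1
        for rec in records
        if all(check(rec.get(field)) for field, check in validators.items())
    )
    return 100.0 * ok / len(records)


# Hypothetical sample data.
rows = [
    {"phone": "+15551234567", "signup": "2024-01-15"},
    {"phone": "(555) 123-4567", "signup": "2024-01-16"},  # phone not E.164
    {"phone": "+15557654321", "signup": "01/17/2024"},    # date not ISO 8601
    {"phone": "+15550001111", "signup": "2024-02-30"},    # impossible date
]

consistency = consistency_pct(rows, {"phone": is_e164, "signup": is_iso_date})
print(consistency)  # 25.0
```

In this sketch a blank value fails the format check. If you would rather leave blanks to the completeness metric, skip them here instead.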

Duplicate Rate

Duplicates are records that represent the same real-world entity but appear multiple times in your dataset. They're one of the most common and most damaging data quality issues.

The classic problem: "John Smith" at Acme Corp exists three times—once as "John Smith," once as "Jon Smith" (typo), and once as "J. Smith" (abbreviation). Your CRM thinks these are three different people. Your sales team might contact them three times. Your analytics counts them three times.

Duplicate Score = 100 - (Duplicate records / Total records × 100)

Note that this inverts the metric. A 5% duplicate rate becomes a 95 duplicate score. This keeps all components on the same scale where higher is better.

Detecting duplicates isn't trivial—you need fuzzy matching or semantic similarity to catch the "John" vs "Jon" cases. But even a basic exact-match check on email addresses will find a lot of them.
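The basic exact-match check on email might be sketched as follows. Two assumptions are baked in: emails are compared after trimming whitespace and lowercasing, and only the extra copies count as duplicates (three records sharing one email contribute two duplicates). This does not attempt fuzzy matching on names.

```python
from collections import Counter


def duplicate_score(records, key="email"):
    """100 minus the percent of records that are extra copies of another record."""
    if not records:
        raise ValueError("no records to score")
    # Assumption: normalize by trimming whitespace and lowercasing.
    keys = [str(rec.get(key) or "").strip().lower() for rec in records]
    # Blank keys are ignored so that missing emails are not treated as duplicates.
    counts = Counter(k for k in keys if k)
    extras = sum(n - 1 for n in counts.values())
    return 100.0 - 100.0 * extras / len(records)


# Hypothetical sample data based on the "John Smith" example above.
contacts = [
    {"name": "John Smith", "email": "john.smith@acme.example"},
    {"name": "Jon Smith", "email": "John.Smith@acme.example "},
    {"name": "J. Smith", "email": "john.smith@acme.example"},
    {"name": "Ana Ruiz", "email": "ana@globex.example"},
    {"name": "No Email", "email": ""},
]

dup_score = duplicate_score(contacts)
print(dup_score)  # 60.0: two of the five records are extra copies
```

The three name variants are caught here only because they share an email address. Records that differ in both name and email would need the fuzzy matching mentioned above.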

Anomaly Rate

Anomalies are values that don't make sense: a customer aged 250, an order total of negative $500, a date in the year 2087. They usually come from data entry errors, system glitches, or (occasionally) fraud.

Some anomalies are obvious violations of business rules. Others are statistical outliers—values that fall far outside the normal range. Both types matter.

Anomaly Score = 100 - (Records with anomalies / Total records × 100)

Again, inverted so higher is better.

What counts as an anomaly depends on your data. Age should probably be 0-120. Prices probably shouldn't be negative. Dates should be within reasonable bounds. You'll need to define the rules for your specific context.
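A rule-based version of the anomaly score could look like the sketch below. The rule set, the field names, and the date bounds are placeholders chosen for the example; statistical outlier detection is not covered here.

```python
from datetime import date

# Hypothetical business rules: each function returns True when the value is valid.
# The date bounds are illustrative assumptions, not recommendations.
RULES = {
    "age": lambda v: 0 <= v <= 120,
    "order_total": lambda v: v >= 0,
    "order_date": lambda v: date(2000, 1, 1) <= v <= date(2030, 12, 31),
}


def anomaly_score(records, rules):
    """100 minus the percent of records with at least one rule violation."""
    if not records:
        raise ValueError("no records to score")

    def has_anomaly(rec):
        # Missing values are skipped; they belong to the completeness metric.
        return any(
            rec.get(field) is not None and not is_valid(rec[field])
            for field, is_valid in rules.items()
        )

    flagged = sum(1 for rec in records if has_anomaly(rec))
    return 100.0 - 100.0 * flagged / len(records)


# Hypothetical sample data.
orders = [
    {"age": 34, "order_total": 120.0, "order_date": date(2024, 3, 1)},
    {"age": 250, "order_total": 80.0, "order_date": date(2024, 3, 2)},
    {"age": 41, "order_total": -500.0, "order_date": date(2024, 3, 3)},
    {"age": 29, "order_total": 15.5, "order_date": date(2087, 1, 1)},
    {"age": None, "order_total": 60.0, "order_date": date(2024, 3, 5)},
]

anom_score = anomaly_score(orders, RULES)
print(anom_score)  # 40.0: three of the five records break a rule
```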

Weighting the Components

Not all quality dimensions matter equally for every dataset. A marketing email list cares a lot about completeness (need those email addresses) and duplicates (don't email the same person twice). Anomaly detection matters less—a weird job title isn't going to break your campaign.

Financial data flips this around. Anomalies are critical (you really need to catch that negative transaction). Completeness might matter less if partial records are still useful for analysis.

Here's a default weighting that works for most general-purpose business data:

| Component | Weight | Rationale |
|---|---|---|
| Completeness | 30% | Missing data limits what you can do with records |
| Consistency | 20% | Format issues cause friction but rarely break things |
| Duplicates | 30% | Duplicates directly waste time and skew analytics |
| Anomalies | 20% | Bad values can mislead decisions if undetected |

The formula becomes:

Clarity Score = (Completeness × 0.30) + (Consistency × 0.20) + (Duplicate Score × 0.30) + (Anomaly Score × 0.20)

Adjust the weights for your use case. If you're prepping data for a machine learning model, consistency might matter more (models hate inconsistent formats). If you're cleaning a CRM before a sales campaign, duplicates should probably weight higher.

Just make sure the weights add to 100%. And document why you chose them—future you will forget.
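To make the arithmetic concrete, here is a minimal sketch of the weighted formula with the default weights, including a check that the weights add to 100%. The dictionary keys and the sample component values are made up for illustration.

```python
import math

# Default weights from the table above, expressed as fractions of 1.
DEFAULT_WEIGHTS = {
    "completeness": 0.30,
    "consistency": 0.20,
    "duplicates": 0.30,
    "anomalies": 0.20,
}


def clarity_score(components, weights=DEFAULT_WEIGHTS):
    """Weighted sum of component scores, each on a 0-100 scale."""
    if set(components) != set(weights):
        raise ValueError("components and weights must cover the same dimensions")
    if not math.isclose(sum(weights.values()), 1.0):
        raise ValueError("weights must add to 100%")
    return sum(components[name] * weights[name] for name in weights)


# Hypothetical component scores.
example = {
    "completeness": 85.0,
    "consistency": 70.0,
    "duplicates": 95.0,
    "anomalies": 98.0,
}

# 85*0.30 + 70*0.20 + 95*0.30 + 98*0.20 = 25.5 + 14.0 + 28.5 + 19.6
print(round(clarity_score(example), 1))  # 87.6
```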

Setting Thresholds

A score is only useful if you know what it means. Here's a general interpretation scale:

| Score Range | Interpretation | Action |
|---|---|---|
| 90-100 | Excellent | Maintain current practices |
| 80-89 | Good | Address specific issues as time allows |
| 70-79 | Acceptable | Prioritize cleanup in your next cycle |
| 60-69 | Concerning | Immediate attention needed |
| Below 60 | Critical | Stop using this data until cleaned |

These thresholds aren't universal. A 75 might be fine for an internal contact list but unacceptable for data feeding a financial model. Calibrate based on the consequences of bad data in your specific context.

The more important thing is having thresholds at all. "Our score dropped from 82 to 71" tells you something changed. "Our score dropped below our 75 threshold" tells you action is required.
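The interpretation scale and the threshold check can be encoded directly, as in this sketch. It assumes each band is defined by its lower bound, so a fractional score such as 89.5 falls in "Good", and it uses 75 as the example alert threshold from the paragraph above.

```python
# Bands from the table above as (lower bound, label, action).
BANDS = [
    (90, "Excellent", "Maintain current practices"),
    (80, "Good", "Address specific issues as time allows"),
    (70, "Acceptable", "Prioritize cleanup in your next cycle"),
    (60, "Concerning", "Immediate attention needed"),
    (0, "Critical", "Stop using this data until cleaned"),
]


def interpret(score):
    """Return the (label, action) pair for a 0-100 Clarity Score."""
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    for floor, label, action in BANDS:
        if score >= floor:
            return label, action


def needs_action(score, threshold=75.0):
    """True when the score has fallen below your own alert threshold."""
    return score < threshold


print(interpret(82))     # ('Good', 'Address specific issues as time allows')
print(interpret(71))     # ('Acceptable', 'Prioritize cleanup in your next cycle')
print(needs_action(71))  # True
```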

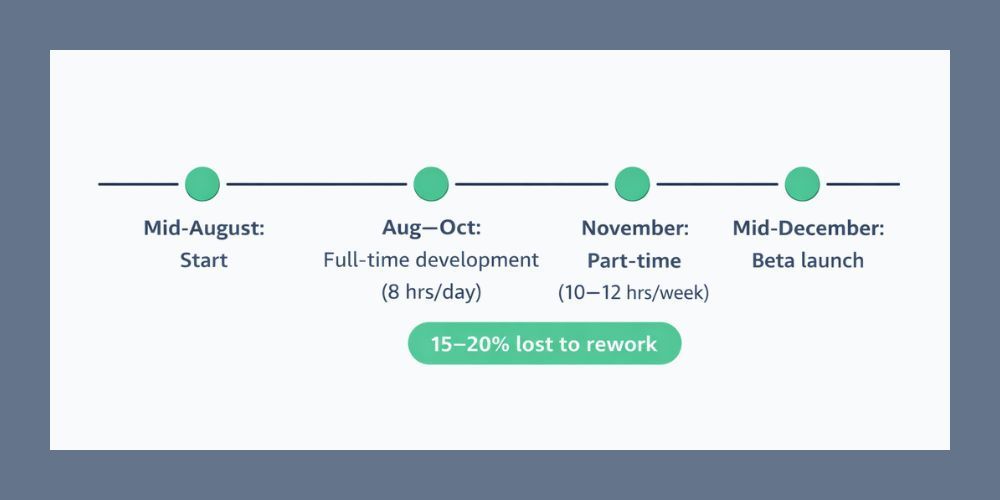

Tracking Clarity Over Time

A point-in-time score is useful. A trend is more useful.

Calculate your Clarity Score on a regular cadence—weekly is good for active datasets, monthly works for more stable ones. Plot it on a chart. Look for patterns.

Things to watch for:

- Gradual decline. Small drops each week often indicate a systematic problem—maybe a data entry process that's drifting, or an integration that's slowly corrupting records. Easier to fix early than after six months of degradation.

- Sudden drops. Usually traceable to a specific event: a bad import, a system migration, a process change. Find the cause and you can often reverse it.

- Improvement plateaus. You've fixed the easy stuff and the score stopped climbing. Time to dig into the harder problems or accept the current level as your baseline.

- Seasonal patterns. Some businesses see data quality dip during busy periods when people rush through data entry. Knowing this helps you plan cleanup efforts.

Reporting on a trend is more persuasive than reporting on an absolute score. "We've improved from 68 to 81 over the past quarter" demonstrates progress in a way that "our score is 81" doesn't.
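Two of the patterns above, sudden drops and gradual decline, are simple enough to flag automatically. The sketch below is one possible approach. The 5-point drop and the four-period window are arbitrary starting values to tune against your own history, not established thresholds.

```python
def sudden_drops(history, min_drop=5.0):
    """Return (index, drop) pairs where the score fell by at least min_drop
    compared with the previous reading."""
    return [
        (i, round(prev - cur, 2))
        for i, (prev, cur) in enumerate(zip(history, history[1:]), start=1)
        if prev - cur >= min_drop
    ]


def gradual_decline(history, periods=4):
    """True when each of the last `periods` readings is lower than the one before."""
    recent = history[-(periods + 1):]
    if len(recent) < periods + 1:
        return False  # not enough history to judge
    return all(later < earlier for earlier, later in zip(recent, recent[1:]))


# Hypothetical weekly scores.
drifting = [82.0, 81.5, 81.0, 80.2, 79.6]
after_bad_import = [82.0, 82.3, 71.0, 71.4]

print(gradual_decline(drifting))       # True
print(sudden_drops(drifting))          # []
print(sudden_drops(after_bad_import))  # [(2, 11.3)]
```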

Your Clarity Scorecard Template

Here's a scorecard format you can adapt:

| Metric | Current | Target | Weight | Weighted Score |
|---|---|---|---|---|
| Completeness | % | 95% | 30% | |
| Consistency | % | 90% | 20% | |
| Duplicate Score | % | 97% | 30% | |
| Anomaly Score | % | 98% | 20% | |
| Clarity Score | | 85+ | 100% | |

Fill in the current values, calculate the weighted scores, and sum them up. Compare against targets. Repeat next period.

The targets in this template are reasonable defaults for business data. Adjust them based on what's achievable and what matters for your use case.
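If you would rather fill in the scorecard with a script than by hand, something like this sketch would do it. The targets and weights come from the template; the current values are invented for the example.

```python
# (metric, target, weight) rows from the template above.
SCORECARD = [
    ("Completeness", 95.0, 0.30),
    ("Consistency", 90.0, 0.20),
    ("Duplicate Score", 97.0, 0.30),
    ("Anomaly Score", 98.0, 0.20),
]


def fill_scorecard(current):
    """Return the filled-in rows plus the overall Clarity Score."""
    rows = []
    for metric, target, weight in SCORECARD:
        value = current[metric]
        rows.append({
            "metric": metric,
            "current": value,
            "target": target,
            "weight": weight,
            "weighted": round(value * weight, 2),
            "meets_target": value >= target,
        })
    total = round(sum(row["weighted"] for row in rows), 2)
    return rows, total


# Hypothetical current values for one reporting period.
current_values = {
    "Completeness": 88.0,
    "Consistency": 92.0,
    "Duplicate Score": 94.0,
    "Anomaly Score": 99.0,
}

rows, total = fill_scorecard(current_values)
for row in rows:
    print(row["metric"], row["weighted"], row["meets_target"])
print("Clarity Score:", total)  # 92.8
```

In this made-up period the overall score clears the 85+ target even though completeness and duplicates miss their individual targets, which is a reason to review the component rows and not only the total.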

Putting It Into Practice

Building a Clarity Score isn't complicated, but it does require some upfront work:

- Define your critical fields for completeness measurement

- Set your format standards for consistency checking

- Run duplicate detection on your key identifier fields

- Establish anomaly rules for numeric and date fields

- Choose your weights based on what matters most

- Calculate the baseline so you know where you're starting

- Set a target and a timeline to get there

CleanSmart calculates all four components automatically when you upload a dataset. You get a Clarity Score without building spreadsheets or writing scripts—just upload and see where you stand.

How often should I recalculate the Clarity Score?

Depends on how fast your data changes. For active operational databases with daily updates, weekly calculation makes sense. For more stable datasets—like an annual customer list—monthly is probably enough. The goal is catching problems before they compound, so err on the side of more frequent if you're unsure.

What if my score is really low?

Don't panic, and don't try to fix everything at once. Look at which component is dragging the score down most and focus there first. A 55 Clarity Score with a 40% duplicate rate tells you exactly where to start. Fix duplicates, recalculate, reassess. Incremental improvement beats paralysis.
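One way to find the component dragging the score down most is to compute how many points each one costs you: its weight times its distance from 100. The sketch below assumes the default weights and uses made-up component scores.

```python
def points_lost(components, weights):
    """Rank components by how many Clarity Score points each one costs."""
    lost = [
        (name, round(weights[name] * (100.0 - components[name]), 2))
        for name in components
    ]
    return sorted(lost, key=lambda pair: pair[1], reverse=True)


weights = {
    "completeness": 0.30,
    "consistency": 0.20,
    "duplicates": 0.30,
    "anomalies": 0.20,
}

# Hypothetical component scores; a 40% duplicate rate gives a duplicate score of 60.
components = {
    "completeness": 80.0,
    "consistency": 75.0,
    "duplicates": 60.0,
    "anomalies": 90.0,
}

ranking = points_lost(components, weights)
print(ranking[0])  # ('duplicates', 12.0)
```

Ranking by points lost, not by raw component score, accounts for the weights: a mediocre score on a heavily weighted component can cost more than a poor score on a lightly weighted one.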

Should I weight all datasets the same way?

Not necessarily. A marketing contact list and a financial transaction log have different quality priorities. It's fine to have different weighting schemes for different data types. Just document each one, so anyone comparing scores across datasets knows which scheme produced each number.

William Flaiz is a digital transformation executive and former Novartis Executive Director who has led consolidation initiatives saving enterprises over $200M in operational costs. He holds MIT's Applied Generative AI certification and specializes in helping pharmaceutical and healthcare companies align MarTech with customer-centric objectives. Connect with him on LinkedIn or at williamflaiz.com.