CSV Validation Rules Every Team Should Enforce

Every bad data problem I've seen in the past year started the same way: someone imported a CSV without checking it first.

The file looked fine. It opened in Excel. It had the right columns. So it went straight into the system, and three weeks later someone's asking why the quarterly numbers don't add up, or why 200 customers have the same phone number, or why there's a negative value in a field that should never be negative.

Validation rules are the fix. Not complicated ones—just a checklist of things that should be true about any file before it touches your production data. Catch the problems at the door instead of finding them later in a broken report.

This post covers the rules worth enforcing on every CSV import, from basic structure checks to field-specific validation. Steal these defaults and adapt them to your data.

Why Validation Rules Matter

The argument for validation isn't abstract. It's about time and trust.

Time: Every data quality issue you catch at import is an issue you don't have to investigate, fix, and explain later. The ratio isn't even close—five minutes of validation saves hours of cleanup.

Trust: When stakeholders learn that bad data got into reports, they stop trusting the reports. Even after you fix the issue, the doubt lingers. "Are we sure this number is right?" is a question that kills momentum.

Validation rules are a forcing function. They make it impossible to import garbage without at least acknowledging you're doing it. That friction is the point.

Required Columns and Types

The most basic validation: does this file have what we expect?

Column presence: Define the columns that must exist. If your customer import expects "email" and "company_name" and the file has "e-mail" and "company," that's a problem to catch now, not after the import silently maps things wrong or drops data.

Column order: Decide whether order matters. Some systems require exact column positions; others match by header name. Know which you're dealing with and validate accordingly.

Data types: Each column should have an expected type. Is this field supposed to be a number? A date? Text? A value that's technically text but contains "$45.99" in a numeric field will break calculations downstream.

Type validation catches:

- Numbers stored as text ("1,234" vs 1234)

- Dates in the wrong format (or not dates at all)

- Empty strings where nulls should be

- Mixed types in a single column

The rules don't have to be fancy. "Column A must exist and contain only integers" is a rule. Write it down, check it on every import.

Allowed Values and Ranges

Once you know the columns exist and have the right types, check whether the values make sense.

Enumerated values: If a field should only contain specific options, validate against the list. Status fields are classic—if your system expects "active," "inactive," and "pending," a value of "Active" (capitalized) or "on hold" (not in the list) shouldn't get through.

Numeric ranges: Define the reasonable bounds. Age should be 0-120. Percentages should be 0-100. Order quantities should be positive. Prices probably shouldn't be negative (unless you handle credits).

String length: Set minimums and maximums. A two-character "name" field is probably wrong. A 10,000-character "notes" field might break your UI. Phone numbers should be within a reasonable digit range.

Regex patterns: For structured text, define the pattern. US zip codes are 5 digits or 5+4 with a hyphen. URLs should start with http:// or https://. Product codes probably follow a specific format.

Here's a starter set of range rules:

| Field Type | Rule |

|---|---|

| Age | 0-1,200, integer |

| Percentage | 0-100 |

| Price/Amount | >=0 (usually) |

| Quantity | >0, integer |

| Year | 1900-2100 |

| Rating (1-5) | 1,2,3,4, or 5 only |

Adjust for your domain. The point is to have explicit rules rather than hoping the data is reasonable.

Date, Phone, and Email Rules

These three fields cause disproportionate pain. They deserve specific attention.

Dates are a mess because formats vary. Is "01/02/2024" January 2nd or February 1st? Depends who created the file. Your validation should either enforce a specific format (ISO 8601: YYYY-MM-DD is the least ambiguous) or at least flag ambiguous dates for review.

Date validation rules:

- Must be parseable as a date

- Must be within a reasonable range (not year 1900 unless you actually have historical data, not year 2099 unless you're scheduling far-future events)

- Start dates must be before end dates

- Dates shouldn't be in the future if they represent past events (order dates, birth dates)

Phone numbers vary by country and format. At minimum, check that they contain only valid characters (digits, spaces, parentheses, hyphens, plus signs) and fall within a reasonable length (7-15 digits typically). If you're US-only, you can be stricter: 10 digits, area code shouldn't start with 0 or 1.

Phone validation rules:

- Contains only valid characters

- 7-15 digits after stripping formatting

- No obviously fake patterns (000-000-0000, 123-456-7890)

- Consistent format within the file (or flag inconsistency)

Emails need both format validation and domain validation. The format check catches obvious errors: missing @ symbol, spaces, invalid characters. Domain validation confirms the domain actually exists and can receive mail.

Email validation rules:

- Contains exactly one @ symbol

- Has text before and after the @

- Domain has a valid TLD (.com, .org, .co.uk, etc.)

- No spaces or invalid characters

- Domain has MX records (if you want to verify deliverability)

Don't over-engineer email validation with complex regex. The edge cases in valid email addresses are weirder than you'd think, and most "strict" patterns reject legitimate addresses. Basic structural checks plus domain verification catch the real problems.

Row-Level vs. Dataset-Level Checks

Some rules apply to individual rows. Others apply to the file as a whole.

Row-level validation checks each record independently:

- Does this row have all required fields?

- Are the values in valid ranges?

- Do the fields pass format validation?

Dataset-level validation looks at the file as a whole:

- Are there duplicate primary keys?

- Is the row count within expected bounds?

- Are there unexpected patterns (same value repeated across all rows, sequential IDs with gaps)?

- Does the distribution look reasonable (not 99% of values in one category)?

Dataset-level checks catch problems that row-level checks miss. A row with customer_id "12345" is valid on its own. Two rows with customer_id "12345" is a duplicate problem. You only see it when you look at the whole file.

Useful dataset-level rules:

- Uniqueness: Primary key columns should have no duplicates

- Completeness: Critical columns should have < X% null values

- Cardinality: Categorical columns should have a reasonable number of distinct values

- Row count: File should have between N and M rows (catches truncated exports or runaway appends)

- Cross-field consistency: If "country" is "USA," then "state" should be a valid US state

Automating Validation

Manual validation doesn't scale. If you're checking CSVs by hand, you'll skip it when you're busy, miss things when you're tired, and apply rules inconsistently.

Automation options, from simple to sophisticated:

- Spreadsheet formulas: For small files, you can build validation into Excel or Google Sheets. Conditional formatting highlights problems, and helper columns flag specific rule violations. It's manual to set up but reusable.

- Scripts: Python with pandas, R, or even bash scripts can validate CSVs against defined rules. Write once, run on every import. The code becomes your documentation of what "valid" means.

- Database constraints: If the CSV is heading into a database, let the database enforce rules. NOT NULL constraints, CHECK constraints, foreign keys, unique indexes. The import fails if the data violates constraints—which is exactly what you want.

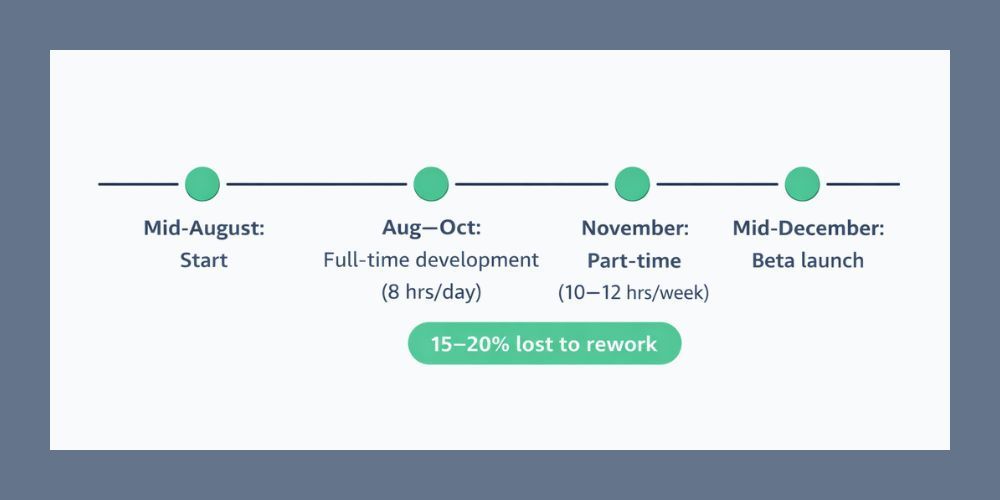

- Dedicated tools: Data quality platforms and ETL tools often have validation built in. CleanSmart validates structure, formats, and field rules automatically when you upload a CSV—you get a report of everything that failed before you decide whether to proceed.

The best approach depends on your volume and complexity. One CSV a week? A spreadsheet template might be fine. Dozens of files from multiple sources? You need automation.

Building Your Validation Checklist

Start with these defaults and customize for your data:

Structure checks:

- All required columns present

- Column names match expected (exact match or mapped)

- No unexpected extra columns

- Row count within expected range

Type checks:

- Numeric columns contain only numbers

- Date columns contain parseable dates

- No mixed types within columns

Value checks:

- Required fields are not null/empty

- Numeric values within valid ranges

- Categorical values in allowed lists

- String lengths within bounds

Format checks:

- Emails are valid format

- Phone numbers match expected pattern

- Dates in consistent format

- URLs are well-formed

Integrity checks:

- Primary keys are unique

- Foreign keys exist in reference data

- Cross-field relationships are consistent

Not every check applies to every file. But having the list means you're making conscious decisions about what to validate rather than hoping for the best.

Start Validating

Upload a CSV to CleanSmart and get an instant validation report. Every column gets type-checked, every field gets format-validated, and every row gets flagged if something's off. You'll know exactly what's wrong before you decide whether to clean it or reject it.

What should I do when a file fails validation?

It depends on what failed and why. For minor issues—a few malformed phone numbers, some dates in the wrong format—you can often clean the data and proceed. For structural problems—wrong columns, completely wrong data types, massive duplicate counts—reject the file and go back to the source. The validation failure is telling you something's wrong with the upstream process, and patching the symptoms doesn't fix the cause. Ask why the export produced bad data rather than just fixing it every time.

How strict should validation rules be?

Strict enough to catch real problems, loose enough to not reject legitimate data. Start stricter than you think you need, then loosen rules when they generate false positives. It's easier to relax a rule that's blocking good data than to tighten one after bad data has been flowing through. For critical systems (financial data, healthcare, anything regulatory), err on the side of too strict. For exploratory analysis, you can afford more flexibility.

Should I validate CSVs that come from internal systems?

Yes. Internal systems produce bad data too—maybe more than external sources, because there's an assumption of trust. Database exports get truncated. ETL jobs fail silently. Someone changes a field definition without updating downstream processes. Validate everything, regardless of source. The five minutes it takes to check is always worth it compared to the alternative.

William Flaiz is a digital transformation executive and former Novartis Executive Director who has led consolidation initiatives saving enterprises over $200M in operational costs. He holds MIT's Applied Generative AI certification and specializes in helping pharmaceutical and healthcare companies align MarTech with customer-centric objectives. Connect with him on LinkedIn or at williamflaiz.com.