CSV Files: The Good, The Bad, and The Messy

You've probably opened hundreds of CSV files in your career. Maybe thousands. They're the cockroaches of data formats—indestructible, everywhere, and somehow still thriving despite better options existing.

That's not entirely an insult. Cockroaches survive because they're simple and adaptable. So do CSVs.

But anyone who's spent an afternoon wrestling with garbled characters, mysteriously merged columns, or dates that Excel decided to "helpfully" reformat knows the dark side. CSV simplicity is a double-edged sword. The format's flexibility means there's approximately zero enforcement of standards, and that freedom creates chaos at scale.

Let's talk about why CSVs became the default, what goes wrong with them, and what you can actually do about it.

Why CSVs Won

The CSV format—comma-separated values—dates back to the early 1970s. It predates the IBM PC. It's older than Microsoft Excel by over a decade.

And it's still everywhere.

The reason is almost embarrassingly simple: a CSV is just text. Open one in Notepad and you can read it. No special software required. No proprietary format to decode. Just rows of data separated by commas, with line breaks between records.

This simplicity made CSVs the universal translator of data. Need to move customer records from one CRM to another? Export as CSV, import as CSV. Want to share data with someone who uses completely different software? CSV works. Building an integration between two systems that have never heard of each other? CSV is the common ground.

Every spreadsheet application, every database, every programming language, every analytics tool can read and write CSVs. That ubiquity is genuinely valuable.

But.

Where It All Falls Apart

The same "just text" simplicity that makes CSVs universal also makes them fragile in ways that aren't obvious until something breaks.

The Encoding Problem

Here's a fun experiment. Open a CSV exported from a European system on an American computer. Watch names like "François" become "François" or worse. Or import a file someone created in Excel on Windows into a Mac application and discover that every accented character has transformed into a tiny rectangle of confusion.

This happens because "just text" isn't actually that simple. Text files need an encoding—a system that maps characters to numbers. UTF-8 is the modern standard, but plenty of older systems default to Latin-1, Windows-1252, or something even more obscure. The CSV format has no way to specify which encoding it uses. So applications guess. And guessing goes wrong constantly.

The frustrating part? The file looks fine on the computer that created it. The corruption only appears when someone else opens it.

Excel's "Helpful" Auto-Formatting

Few things in data work inspire more quiet rage than Excel's automatic format detection.

You import a CSV containing product codes like "1-15" or "3/4" and Excel decides these are obviously dates. March 4th. January 15th. Your product codes are now permanently mangled, and the original values are gone—Excel doesn't store what you imported, just its interpretation.

Gene names in scientific research get the same treatment. MARCH1 and SEPT2 (actual human gene symbols) become calendar dates. This problem is so widespread that researchers have published papers specifically about it, and some gene naming committees have actually renamed genes to avoid Excel corruption.

The format doesn't even have to look like a date. Long numeric IDs get silently converted to scientific notation, losing precision. Leading zeros disappear from ZIP codes and product codes. Excel is trying to be smart, but it's destroying data in the process.

The Delimiter Dilemma

Comma-separated values. Simple enough. Except when your data contains commas.

An address field with "123 Main Street, Suite 400" breaks the parsing because that comma looks like a field separator. The standard solution is quoting—wrap fields containing commas in double quotes. But what if your data contains quotes? Then you escape them by doubling them up. What if your export tool doesn't handle this correctly? Then you get columns that shift unpredictably, data landing in the wrong fields, and hours of manual cleanup.

It gets worse internationally. In much of Europe, the comma is the decimal separator (€1,50 instead of €1.50), so many European systems use semicolons as the CSV delimiter instead. Export from a German system, import on an American one, and nothing lines up correctly.

The Line Break Lottery

Different operating systems use different characters to mark the end of a line. Windows uses two characters (carriage return plus line feed). Mac and Linux use just one (line feed). Old Mac systems used a different single character (carriage return alone).

Most modern software handles this gracefully. Most. Some tools will split your records in unexpected places or concatenate multiple records into one. A multiline address field can fracture into three separate rows if the software isn't handling line breaks within quoted fields correctly.

Real-World Chaos

These aren't theoretical problems. They show up constantly in actual business data.

Customer names with apostrophes that break field boundaries. Product descriptions containing quotes and commas in every combination. Phone numbers that Excel decided were really large integers and formatted in scientific notation. Date columns where January 2nd and February 1st are now indistinguishable because both got converted to "1/2/2023."

The worst part is inheritance. You didn't create the mess. Someone exported this file three systems ago, and each step introduced new problems. Now you're the one who has to make sense of it.

Prevention: Creating Clean CSVs

If you're generating CSVs, a few practices prevent most problems:

Always use UTF-8 encoding. It handles international characters correctly and is the closest thing to a universal standard. Most modern tools default to it, but check your export settings.

Quote all fields, not just the ones containing special characters. It's slightly larger files but dramatically fewer parsing failures.

Use ISO date formats. YYYY-MM-DD (like 2025-01-15) is unambiguous regardless of locale. January 15th and February 1st can't be confused.

Export numbers as plain text when precision matters. Leading zeros in ZIP codes, long ID numbers, anything where the exact string matters more than the numeric value.

Include a header row with clear column names. It seems obvious, but plenty of CSVs arrive without one, leaving the recipient guessing what each column contains.

Recovery: Fixing the Mess You Inherited

Prevention is great when you control the source. But you probably don't. You're dealing with files created by other systems, exported by other people, touched by applications you've never heard of.

Manual cleanup works for small files. A few hundred rows, you can fix encoding issues and reformat dates by hand. Tedious, but manageable.

At scale? Not realistic. A customer database with 50,000 records and encoding corruption throughout isn't something you can fix cell by cell. Neither is a product catalog where 200 SKUs got date-formatted into oblivion.

This is where automated cleaning becomes essential. Tools that can detect encoding mismatches and fix them. Systems that identify the date formats actually present in your data rather than guessing. Software that understands when "1-15" is a product code and not a calendar date.

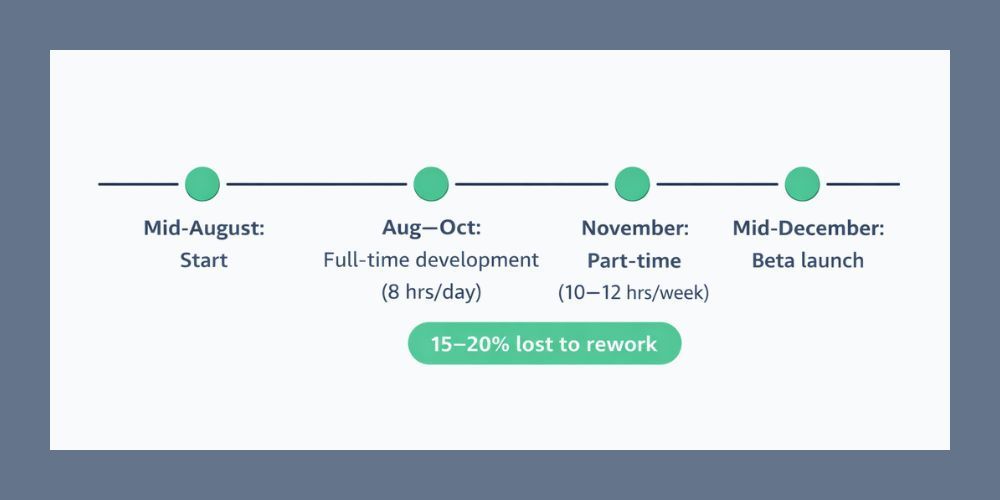

CleanSmart was built specifically for this kind of recovery work. Upload your problematic CSV, and the platform handles the encoding detection, identifies formatting inconsistencies, and lets you standardize everything before the data causes downstream problems.

The Uncomfortable Truth

CSVs aren't going anywhere. They're too useful, too universal, too embedded in how systems exchange data. Every integration, every migration, every data export will probably involve a CSV somewhere in the pipeline.

The format's simplicity is genuinely valuable. But that simplicity means quality control has to happen somewhere else—either in careful creation practices, automated cleaning tools, or painful manual repair.

You can't change what the format does. You can only deal with it better.

How do I know if my CSV has encoding problems?

The telltale sign is garbled characters where normal text should be. Names like "José" appearing as "José" or "Müller" showing up as "Müller" means the file was created in one encoding and opened in another. You might also see question marks, empty boxes, or strange symbols replacing accented characters, apostrophes, or currency symbols. If your data looks fine in one application but corrupted in another, encoding is almost always the culprit.

Can I stop Excel from auto-formatting my data?

Yes, but it requires importing the CSV through Excel's data import wizard rather than just double-clicking the file. Use Data → Get Data → From Text/CSV, then set problematic columns to "Text" format before importing. This prevents Excel from guessing that your product codes are dates. The catch: you have to do this every time you open the file. If you just double-click a CSV, Excel applies its auto-formatting immediately and the original values are gone.

When should I use a different format instead of CSV?

If your data contains complex nested structures, multiple related tables, or needs to preserve exact data types, formats like JSON, Parquet, or even a proper database export are more reliable. CSVs work best for flat, tabular data without too many special characters. That said, most business data exchange still happens via CSV because it's the one format everyone can read—so learning to handle its quirks is usually more practical than avoiding it entirely.

William Flaiz is a digital transformation executive and former Novartis Executive Director who has led consolidation initiatives saving enterprises over $200M in operational costs. He holds MIT's Applied Generative AI certification and specializes in helping pharmaceutical and healthcare companies align MarTech with customer-centric objectives. Connect with him on LinkedIn or at williamflaiz.com.